This is the second of a two-part post. Part 1 showed how we used an event stream strategy to decouple our systems. This part presents some tips for implementing the strategy.

Get Organized

Don’t jump into tech before getting organized! Events are a shared resource, so it’s critical to get everyone on the same page.

Share schemas in a human readable document. Record the canonical event definitions in a shared document that supports versioned collaborative editing. I don’t recommend a code repo because some stakeholders are not programmers. It’s hard to beat the effort-to-results ratio of a shared document. Any confusion between event producers and event consumers is rapidly ironed out via comment threads.

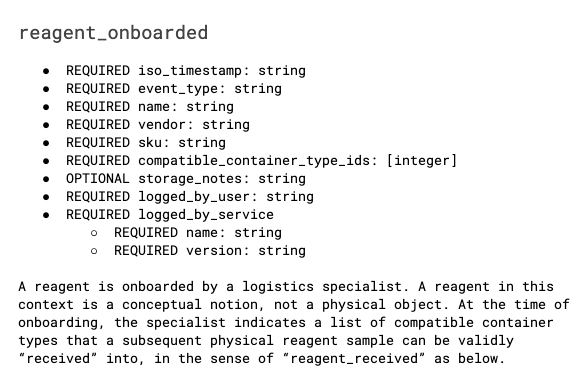

In the shared doc, our events look like this:

Some tips for this document:

- Try to use a notation that is maximally human-readable and yet still precise enough to translate into a machine-readable schema later.

- Try to keep the focus on content, not formatting. Simple bullet lists and courier font is preferable to fancy tables.

- Try to limit your schema to simple primitive types (strings and numerics) and simple collections like arrays. This makes the event more suitable for a wide audience of consumers.

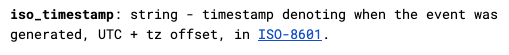

- The doc should define the format of specialized primitives to prevent misinterpretation. Any event that uses such types must follow the definition. In the example above,

iso_timestampis defined like so:

- Likewise, define global fields in the document. For example, the fields of

logged_by_serviceare defined by compliance rules. The definition should include links to supporting documents as needed. A global field can be required or optional; if present it must follow the definition. - Require that events include a description in plain English (or your human language of choice). You’ll catch many misunderstandings this way.

Treat events as a shared business language. You have a community of consumers and producers, including non-developers, that must speak this language. Make sure they engage with each other early and often. You may need to establish working groups, write glossaries, and have discussions about jargon and definitions. These are good exercises in any case, because they lead to deeper understanding of the business domain. Budget ample time for this coordination.

Event Design Tips

Use abstractions that don’t expose implementation. Design events at the company level, and try not to expose implementation details or team structure. For example, don’t use names that reference specific teams or services. Don’t write an event that can only be read (or written) by one service. Events should not be broken by service-level refactoring or team re-orgs.

Use machine-parsable names. I recommend that text intended to be machine-parsable (e.g. event names, field names, and enumerations) should be in all lowercase snake_case. Some serializers have trouble with dashes, so underscores are slightly safer. Use all lowercase to reduce the risk that, for example, one event uses the name RNAExtraction and another event uses RnaExtraction.

Use past-tense. By convention, since an event is a record of a past occurrence, they should be past-tense. Examples: sample_processed, user_registered.

Record the event type inside the event body. Otherwise, storing the body of the event into archives will lose the original type.

Don’t be afraid to add extra content. We are all taught to DRY out stuff, but you can relax that for events. Don’t relentlessly prune the event until it is just foreign keys. The goal is to decouple systems. We aren’t very decoupled if every consumer needs to call a bunch of services to interpret the event. Try to make life easy on consumers. On the other hand:

Don’t embed blobs, link to them. Events should not carry large static payloads such as pictures. This causes processing and storage problems. Instead, use links to suitable storage (e.g. S3).

Decide on your schema evolution strategy early. Schema evolution is a very complex topic that is beyond the scope of this post. You’ll need to decide how much complexity you need. Your evolution strategy will directly influence which serialization framework format you use. If your project permits, the simplest approach is the “only append; no breaking changes” strategy.

App logs are not events. App events such as errors, user-tracking, performance metrics, etc., should use traditional logging. They are not general business occurrences useful to a wide variety of consumers.

Commands are not events. A command, such as create_user, is a transactional request that can fail. Event streams are unsuitable for commands because there’s no direct response path back to the client. Implement commands using synchronous APIs. Tip: imperative phrasing (“create X”) is a hint that you might be looking at a command.

Async tasks aren’t events either. Your classic async task dispatching system looks kind of similar to event streaming; you are pushing items onto a “queue.” But like commands, tasks can fail, and there’s an implied response path back to the client. Also, a task can fail and be pushed back onto the queue, while event streams are never re-ordered.

Choose an event granularity that delivers the best effort-to-value for event consumers. Here’s a good heuristic:

- If this event were split into two events, would any consumer subscribe to one, but not the other? If the answer is yes, then consider splitting it.

- Is this event so narrow that it carries no useful business value by itself? If yes, eliminate it or merge it into a finalizing event.

For example, user_moved_mouse is too narrow, and user_clicked_ok lacks enough context. user_accepted_eula feels right.

Consider when to “batch” events. Batching is when you put multiple “mini-events” inside one event. For example, if an inspector approves a box of widgets, is that one event per widget, or one event per box? Consumers often prefer you to batch them up, and that’s usually OK if the mini-events are homogeneous.

Batching can improve usability and performance, but watch out for implementation problems due to large event sizes. Large events can cause memory and latency problems. For really large collections, store them elsewhere and put a link in the event.

If the events are not homogeneous, they should not be batched together. inspector_approved_box and inspector_went_to_lunch are different business occurrences and should be different events. Events should be cohesive.

Implementation Tips

Use a cross-language serialization framework, not raw JSON. To prevent garbage data, use a framework that can enforce and validate schemas. Prefer a industry-standard framework such as Thrift, Avro, or Protobuf to lower effort and maximize compatibility. Always check with consumers such as analytics and BI to make sure they can ingest the format.

Partition each event type into its own stream. This allows consumers to easily ignore events they don’t care about. Don’t force consumers to read an event just to determine whether they can ignore it.

Distinguish between “business timestamps” and “implementation timestamps.” A “business timestamp” denotes when the business occurrence happened. An “implementation timestamp” denotes when the event was generated by an implementation. These times can differ, so you may need both types in the same event.

Use client libraries for producers. Use client libraries to make sure that serialization is consistent, required fields are present, versioning is preserved, and so on. The more consistency you can enforce, the less chance of seeing bad data in your events. Client libraries for consumers are also helpful, but not existentially important.

Locate all machine-readable event schemas in one repo, and trigger tests. Despite your best efforts, someone may accidentally introduce a breaking change into a schema. To detect this early, schemas should be in one place, and merging should trigger compatibility tests. This may require a post-commit hook to trigger tests in different projects, depending on your repo organization.

Thanks for Reading!

That wraps it up for Part 2. I hope that these posts help demystify event streaming as a strategy, and gives you some useful pointers to get started.